Mani:

Hello,

Has anyone worked with GCE persistent disks to create PVs and StorageClasses? I’m stuck on the problem below; please suggest an idea.

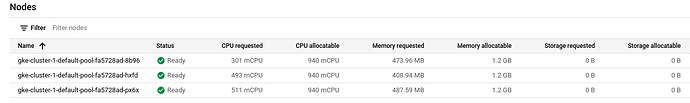

I’m trying to create a simple Deployment with 3 replicas, configured to test storage on the cloud. Once I create a PVC and map it to the Deployment, a disk is created dynamically (with the help of a StorageClass) in one particular GCP zone and automatically attached to a worker node instance in that same zone. My problem is that all the pods from the Deployment get scheduled to the same node where the disk is attached. I want to know how to create a disk that spans the cluster, or a central one, so that the Deployment’s pods on different nodes can read and write to it.
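To make the setup concrete, here is a minimal sketch of what is described above: one PVC provisioned through a StorageClass, plus a 3-replica Deployment whose pods all mount it. The names (`storage-test`, `storage-test-pvc`), the `standard` StorageClass, the `nginx` image and the `ReadWriteOnce` access mode are assumptions, not details from the original post. The manifests are built as plain Python dicts and printed as JSON, which `kubectl apply -f -` accepts just like YAML.

```python
import json

# Hypothetical names: adjust to match your own cluster.
APP = "storage-test"
CLAIM = "storage-test-pvc"
STORAGE_CLASS = "standard"  # assumption: a StorageClass backed by GCE persistent disks


def pvc(name: str, storage_class: str, size: str = "10Gi") -> dict:
    """A PVC that dynamically provisions a single GCE persistent disk."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # assumed mode for this setup
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def deployment(name: str, claim: str, replicas: int = 3) -> dict:
    """A Deployment whose replicas all mount the same PVC."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "app",
                        "image": "nginx",  # placeholder image
                        "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                    }],
                    # Every replica points at the same claim, i.e. the same single disk.
                    "volumes": [{
                        "name": "data",
                        "persistentVolumeClaim": {"claimName": claim},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Usage: python this_script.py | kubectl apply -f -
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [pvc(CLAIM, STORAGE_CLASS), deployment(APP, CLAIM)],
    }
    print(json.dumps(manifest, indent=2))
```

Because all three replicas reference one claim, they all depend on one zonal disk, which is consistent with the pods piling onto the node where that disk is attached.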

unnivkn:

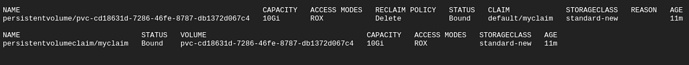

Hi Mani, check your PV access mode; it might be ReadWriteOnce. Update it to ReadOnlyMany or ReadWriteMany.

ReadWriteOnce: The volume can be mounted as read-write by a single node.

ReadOnlyMany: The volume can be mounted read-only by many nodes.

ReadWriteMany: The volume can be mounted as read-write by many nodes. PersistentVolume resources that are backed by Compute Engine persistent disks don’t support this access mode.
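As a rough illustration of the three modes above, the sketch below builds a PVC that requests a single access mode and refuses ReadWriteMany when the backing store is a GCE persistent disk, following the note on ReadWriteMany. The `gce_pd` flag and the `pvc_manifest` helper are hypothetical conveniences that only encode that note; Kubernetes itself has no such parameter. The claim name `shared-data` and the `standard` StorageClass are likewise assumptions.

```python
import json

# The three access modes listed above.
ACCESS_MODES = {"ReadWriteOnce", "ReadOnlyMany", "ReadWriteMany"}
# Per the note above, GCE persistent disks do not support ReadWriteMany.
GCE_PD_MODES = {"ReadWriteOnce", "ReadOnlyMany"}


def pvc_manifest(name: str, access_mode: str, size: str = "10Gi",
                 storage_class: str = "standard", gce_pd: bool = True) -> dict:
    """Build a PVC requesting one access mode; reject modes GCE PD cannot serve."""
    if access_mode not in ACCESS_MODES:
        raise ValueError(f"unknown access mode: {access_mode}")
    if gce_pd and access_mode not in GCE_PD_MODES:
        raise ValueError(f"{access_mode} is not supported by GCE persistent disks")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_manifest("shared-data", "ReadOnlyMany"), indent=2))
    try:
        pvc_manifest("shared-data", "ReadWriteMany")
    except ValueError as err:
        print("rejected:", err)
```

Failing early like this makes the GCE PD limitation visible before anything is applied to the cluster, instead of surfacing later as a claim that never binds.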

Mani:

Hi @unnivkn, thank you for your suggestion. I tried ReadOnlyMany, but the pods still get scheduled on the single node where the disk is mounted. I’m using a 3-node cluster here.

unnivkn:

Please delete the PVC and create it again with the correct access mode, then confirm that the PV and the PVC both have the same access mode.
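One way to do that confirmation is to compare `spec.accessModes` on both objects. The sketch below assumes `kubectl` is installed and pointed at the cluster; `get_json`, `access_modes` and `same_access_modes` are hypothetical helper names. The comparison is exact set equality, matching the advice above; Kubernetes binding itself only requires the PV to offer the modes the claim requests, so equality is the stricter check.

```python
import json
import subprocess


def get_json(kind: str, name: str) -> dict:
    """Fetch an object as JSON via kubectl (requires kubectl on PATH)."""
    out = subprocess.run(["kubectl", "get", kind, name, "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)


def access_modes(obj: dict) -> set:
    """Access modes declared in a PV or PVC spec."""
    return set(obj.get("spec", {}).get("accessModes", []))


def same_access_modes(pv: dict, pvc: dict) -> bool:
    """True when the PV and the PVC declare exactly the same access modes."""
    return access_modes(pv) == access_modes(pvc)


if __name__ == "__main__":
    # Offline example; against a live cluster use
    # same_access_modes(get_json("pv", "<pv-name>"), get_json("pvc", "<pvc-name>")).
    pv = {"spec": {"accessModes": ["ReadOnlyMany"]}}
    pvc = {"spec": {"accessModes": ["ReadWriteOnce"]}}
    print(same_access_modes(pv, pvc))
```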

Mani:

Yes, I tried that on a new cluster. Anyway, could you suggest another provisioner I can use to store data from pods across the cluster? And what type of storage is used in production?

Mani:

Great, thanks! I’ll check this.

unnivkn:

You’re welcome.