Hello,

I was trying to play Game of Pods, but I get the following event when I schedule the mysql pod.

Failed to pull image "mysql:5.7": rpc error: code = Unknown desc = Error response from daemon: toomanyrequests:

Reference: Understanding Your Docker Hub Rate Limit | Docker

tgp

December 14, 2020, 2:35am

#2

Could you please try again and let us know whether the issue still persists?
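Before retrying, it can help to see how many anonymous pulls the node's IP address still has. The following is a minimal sketch of the `ratelimitpreview/test` check described in Docker's rate-limit documentation. It assumes `curl` and `jq` are installed; the function names `parse_ratelimit` and `check_dockerhub_limit` are made up for illustration.

```shell
# Hypothetical helper: split a Docker Hub rate-limit header value such as
# "100;w=21600" into "<count> <window-seconds>".
parse_ratelimit() {
  value="$1"
  count="${value%%;*}"     # text before the first ';'
  window="${value##*w=}"   # text after 'w='
  echo "${count} ${window}"
}

# Print the rate-limit headers for the current IP address.
# Run this on the machine that actually pulls the images.
check_dockerhub_limit() {
  token=$(curl -s "https://auth.docker.io/token?service=registry.docker.io&scope=repository:ratelimitpreview/test:pull" | jq -r .token)
  curl -s --head -H "Authorization: Bearer ${token}" \
    "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest" \
    | grep -i '^ratelimit-'
}
```

Calling `check_dockerhub_limit` should print the `ratelimit-limit` and `ratelimit-remaining` headers. A value such as `100;w=21600` means 100 pulls per 21600 seconds (six hours), which `parse_ratelimit` splits into its two numbers.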

Hello,

I am currently having this issue with the voting app… It's a bit of a joke to hit such issues while training for CKAD xD

I don't know if it's down for the day or if I can try again in 10 minutes…

Deployment

    controlplane $ kubectl describe --namespace=vote deployments.apps worker
    Name:                   worker
    Namespace:              vote
    CreationTimestamp:      Fri, 30 Jul 2021 13:13:20 +0000
    Labels:                 name=worker
    Annotations:            deployment.kubernetes.io/revision: 1
                            kubectl.kubernetes.io/last-applied-configuration:
                              {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"name":"worker"},"name":"worker","namespace":"vote"},"s...
    Selector:               app=worker
    Replicas:               1 desired | 1 updated | 1 total | 0 available | 1 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  25% max unavailable, 25% max surge
    Pod Template:
      Labels:  app=worker
      Containers:
       worker:
        Image:        kodekloud/examplevotingapp_worker
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Progressing    True    NewReplicaSetAvailable
      Available      False   MinimumReplicasUnavailable
    OldReplicaSets:  <none>
    NewReplicaSet:   worker-67b6657 (1/1 replicas created)
    Events:
      Type    Reason             Age   From                   Message
      ----    ------             ----  ----                   -------
      Normal  ScalingReplicaSet  16m   deployment-controller  Scaled up replica set worker-67b6657 to 1

    controlplane $ kubectl describe --namespace=vote pods
    db-deployment-8f8fd4c4f-6vvcn     result-deployment-67fd7d7ddc-79sjc   vote-deployment-7d56b78898-r48vn
    redis-deployment-f9858f4bc-7crxn  result-deployment-859bf98d54-2tgsp   worker-67b6657-vw6gx

Pod

    controlplane $ kubectl describe --namespace=vote pods worker-67b6657-vw6gx
    Name:               worker-67b6657-vw6gx
    Namespace:          vote
    Priority:           0
    PriorityClassName:  <none>
    Node:               node01/172.17.0.32
    Start Time:         Fri, 30 Jul 2021 13:13:20 +0000
    Labels:             app=worker
                        pod-template-hash=67b6657
    Annotations:        <none>
    Status:             Running
    IP:                 10.32.0.195
    Controlled By:      ReplicaSet/worker-67b6657
    Containers:
      worker:
        Container ID:   docker://f1bd8450098c37abcc73b5857177eef5e30742bc3aa0ea97cb7ecc6c6164316c
        Image:          kodekloud/examplevotingapp_worker
        Image ID:       docker-pullable://kodekloud/examplevotingapp_worker@sha256:55753a7b7872d3e2eb47f146c53899c41dcbe259d54e24b3da730b9acbff50a1
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Waiting
          Reason:       ImagePullBackOff
        Last State:     Terminated
          Reason:       Error
          Exit Code:    1
          Started:      Fri, 30 Jul 2021 13:14:49 +0000
          Finished:     Fri, 30 Jul 2021 13:15:05 +0000
        Ready:          False
        Restart Count:  2
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-rfnn8 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      default-token-rfnn8:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-rfnn8
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason     Age                   From               Message
      ----     ------     ----                  ----               -------
      Normal   Scheduled  17m                   default-scheduler  Successfully assigned vote/worker-67b6657-vw6gx to node01
      Normal   Pulled     15m (x3 over 16m)     kubelet, node01    Successfully pulled image "kodekloud/examplevotingapp_worker"
      Normal   Created    15m (x3 over 16m)     kubelet, node01    Created container worker
      Normal   Started    15m (x3 over 16m)     kubelet, node01    Started container worker
      Warning  Failed     14m (x2 over 14m)     kubelet, node01    Failed to pull image "kodekloud/examplevotingapp_worker": rpc error: code = Unknown desc = Error response from daemon: toomanyrequests: You have reached your pull rate limit. You may increase the limit by authenticating and upgrading: https://www.docker.com/increase-rate-limit
      Normal   Pulling    14m (x6 over 17m)     kubelet, node01    Pulling image "kodekloud/examplevotingapp_worker"
      Warning  Failed     14m (x3 over 14m)     kubelet, node01    Error: ErrImagePull
      Warning  BackOff    12m (x12 over 16m)    kubelet, node01    Back-off restarting failed container
      Normal   BackOff    7m5s (x14 over 10m)   kubelet, node01    Back-off pulling image "kodekloud/examplevotingapp_worker"
      Warning  Failed     2m12s (x35 over 10m)  kubelet, node01    Error: ImagePullBackOff
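The toomanyrequests event above comes from Docker Hub's limit on anonymous pulls. The image here has no tag, so Kubernetes treats it as `:latest` and defaults to `imagePullPolicy: Always`, which means each restart of the crashing container contacts the registry again. One possible workaround, sketched below, is to pull with a Docker Hub account through an image pull secret. The secret name `regcred`, the `DOCKER_USER` and `DOCKER_PASSWORD` variables, and both function names are placeholders, not part of the lab.

```shell
# Hypothetical helper: build the JSON patch that attaches a pull secret
# to a service account.
pull_secret_patch() {
  printf '{"imagePullSecrets":[{"name":"%s"}]}' "$1"
}

# Assumed usage: create the secret in the vote namespace and attach it to
# the default service account, so that newly created pods pull as that user.
setup_pull_secret() {
  kubectl create secret docker-registry regcred \
    --namespace=vote \
    --docker-server=https://index.docker.io/v1/ \
    --docker-username="${DOCKER_USER}" \
    --docker-password="${DOCKER_PASSWORD}"
  kubectl patch serviceaccount default --namespace=vote \
    -p "$(pull_secret_patch regcred)"
}
```

Pods that already exist do not pick up the secret, so the worker pod would have to be deleted and recreated by its ReplicaSet afterwards. Authenticated accounts are also rate limited, only with a higher quota, so this reduces the problem rather than removing it.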

Max

Hello,

Same issue for me with example-voting-app on GCP:

    yg_herve@cloudshell:~/example-voting-app/k8s-specifications (thermal-micron-371410)$ kubectl describe pod worker-app-deploy
    Name:             worker-app-deploy-56f699bdd5-87xbr
    Namespace:        default
    Priority:         0
    Service Account:  default
    Node:             gke-example-voting-app-default-pool-505f8919-pfnj/10.128.0.4
    Start Time:       Mon, 12 Dec 2022 11:13:03 +0000
    Labels:           app=demo-voting-app
                      name=worker-app-pod
                      pod-template-hash=56f699bdd5
    Annotations:      <none>
    Status:           Running
    IP:               10.68.1.11
    IPs:
      IP:           10.68.1.11
    Controlled By:  ReplicaSet/worker-app-deploy-56f699bdd5
    Containers:
      worker-app:
        Container ID:   containerd://aaaa8433303309c682b8b2ab2f2f6f38dfe553c8b1f3cb612fbc45768671336c
        Image:          kodekloud/examplevotingapp_worker:v1
        Image ID:       docker.io/kodekloud/examplevotingapp_worker@sha256:741e3aaaa812af72ce0c7fc5889ba31c3f90c79e650c2cb31807fffc60622263
        Port:           <none>
        Host Port:      <none>
        State:          Waiting
          Reason:       CrashLoopBackOff
        Last State:     Terminated
          Reason:       Error
          Exit Code:    1
          Started:      Mon, 12 Dec 2022 11:14:31 +0000
          Finished:     Mon, 12 Dec 2022 11:14:31 +0000
        Ready:          False
        Restart Count:  4
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-nknq7 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      kube-api-access-nknq7:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                   From               Message
      ----     ------     ----                  ----               -------
      Normal   Scheduled  2m35s                 default-scheduler  Successfully assigned default/worker-app-deploy-56f699bdd5-87xbr to gke-example-voting-app-default-pool-505f8919-pfnj
      Normal   Pulled     67s (x5 over 2m35s)   kubelet            Container image "kodekloud/examplevotingapp_worker:v1" already present on machine
      Normal   Created    67s (x5 over 2m35s)   kubelet            Created container worker-app
      Normal   Started    67s (x5 over 2m35s)   kubelet            Started container worker-app
      Warning  BackOff    38s (x10 over 2m32s)  kubelet            Back-off restarting failed container
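Unlike the earlier posts, the events here report the image as already present on the node, so these restarts do not look like a Docker Hub rate-limit problem: the container itself exits with code 1. A small sketch for telling the two cases apart, assuming kubectl access to the cluster; both function names are illustrative.

```shell
# Hypothetical helper: map a container's waiting reason to a likely next step.
next_step_for_reason() {
  case "$1" in
    ErrImagePull|ImagePullBackOff)
      echo "image pull problem: check registry access and rate limits" ;;
    CrashLoopBackOff)
      echo "container keeps exiting: read its logs with kubectl logs --previous" ;;
    *)
      echo "unrecognized reason: inspect kubectl describe pod output" ;;
  esac
}

# Look up the waiting reason of the first container in a pod and print
# a suggestion. Usage: triage_pod <pod-name> [namespace]
triage_pod() {
  pod="$1"
  ns="${2:-default}"
  reason=$(kubectl get pod "$pod" --namespace="$ns" \
    -o jsonpath='{.status.containerStatuses[0].state.waiting.reason}')
  next_step_for_reason "$reason"
}
```

For the pod above, `kubectl logs worker-app-deploy-56f699bdd5-87xbr --previous` would show what the last crashed container printed before it exited.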

Ayman

December 12, 2022, 12:16pm

#5

Hello @yg.herve

Note: We will create the deployments again, so before following these steps, run kubectl delete deployment --all to delete the old deployments and avoid conflicts.

Run git clone https://github.com/mmumshad/example-voting-app-kubernetes-v2.git

Run cd example-voting-app-kubernetes-v2/

Run vim postgres-deployment.yml and modify its contents as below, then save and exit.

Note: This reflects a recent update to Postgres.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: postgres-deployment
      labels:
        app: demo-voting-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: postgres-pod
          app: demo-voting-app
      template:
        metadata:
          name: postgres-pod
          labels:
            name: postgres-pod
            app: demo-voting-app
        spec:
          containers:
            - name: postgres
              image: postgres:9.4
              env:
                - name: POSTGRES_USER
                  value: postgres
                - name: POSTGRES_PASSWORD
                  value: postgres
                - name: POSTGRES_HOST_AUTH_METHOD
                  value: trust
              ports:
                - containerPort: 5432

Run kubectl create -f . if you are creating the deployments for the first time. If you created the same deployments before, run kubectl apply -f . instead.

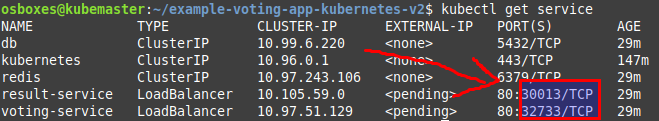

Run kubectl get service to get the exposed ports.

For example, if the output of the command is as shown above, you can access the voting app by opening One_of_the_worker_nodes_IP:32733 in your browser, and the result app at One_of_the_worker_nodes_IP:30013.
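The node IP and port can also be read from the cluster instead of being copied by hand. This is a rough sketch that assumes the service is of type NodePort and that the first node has an ExternalIP address (as on GKE, where a firewall rule for the node port may also be needed). The function names are made up, and the service name passed in must be taken from the kubectl get service output.

```shell
# Hypothetical helper: build the browser URL for a NodePort service.
nodeport_url() {
  echo "http://$1:$2"
}

# Assumed usage: show_service_url <service-name>
# Reads the first node's ExternalIP and the service's first nodePort.
show_service_url() {
  svc="$1"
  node_ip=$(kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="ExternalIP")].address}')
  port=$(kubectl get service "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
  nodeport_url "$node_ip" "$port"
}
```

On a cluster whose nodes only report an InternalIP, such as a lab environment, the filter would need `InternalIP` in place of `ExternalIP`.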

Check :

Note: The voting application only accepts one vote per client. It does not register votes if a vote has already been submitted from a client.

Hope this helps!